Last month I posted Free the tweets! Export TwapperKeeper archives using Google Spreadsheet, which was a response to the announcement that TwapperKeeper would be removing public access to archives on the 6th January. This solution was limited to archives smaller than 15,000 tweets (although minor tweaking could probably get more). Since then Tony Hirst has come up with a couple of other solutions:

- Rescuing Twapperkeeper Archives Before They Vanish « OUseful.Info

- Rescuing Twapperkeeper Archives Before They Vanish, Redux « OUseful.Info

- Python Script for Exporting (Large) Twapperkeeper Archives By User « OUseful.Info

One of the limits these solutions have is they only collect the data stored on TwapperKeeper missing lots of other juicy data like in_reply_to, location, retweet_count (here’s what a tweet used to look like, now there is more data). Whilst this data is probably of little interest to most people for people like me it opens the opportunity to do other interesting stuff. So here’s a way you can make a copy of a Twapper Keeper archive and rebuild the data using Google Refine.

- You’re going to need a copy of Google Refine and install/run it

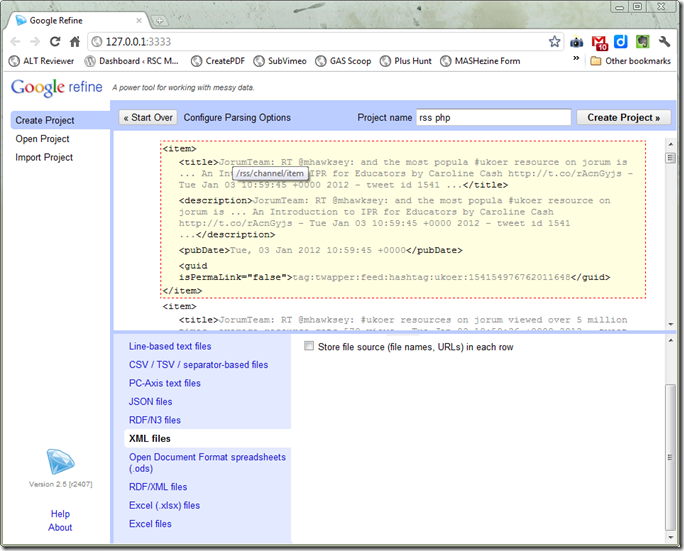

- Visit the Twapper Keeper page of the archive you want. On the page copy the RSS Permalink into the URL box in Google Refine adding &l=50000 to the end e.g. the ukoer archive is http://twapperkeeper.com/rss.php?type=hashtag&name=ukoer&l=50000 and click Next.

- In the preview window that appears switch ‘Parse data as’ to XML files. Scroll the top pane down to hover over the ‘item’ tag and click

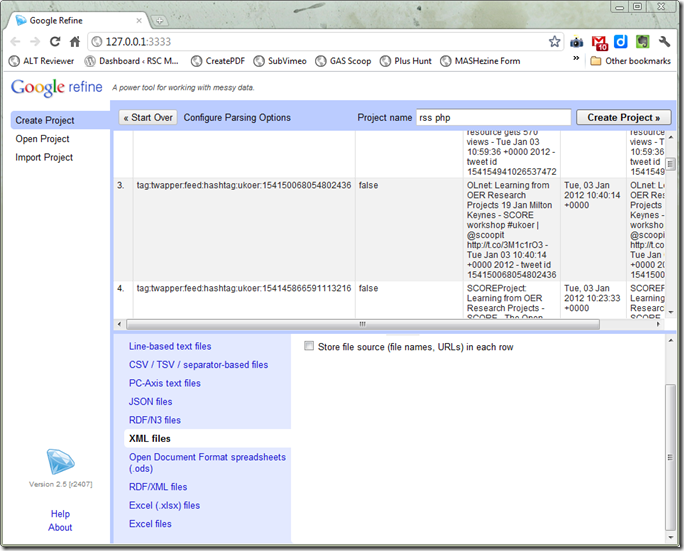

- You should now have a preview with the data split in columns. Enter a Project name and click ‘Create Project’

- From the ‘item – title’ column dropdown select Edit column > Add column based on this column…

- In the dialog that opens enter a New column name ‘id_str’ and the Expression

smartSplit(value," ")[-1](this splits the cell and returns the last group of text) - From the new id_str column dropdown select Edit column > Add column by fetching URLs… and enter a name ‘meta’, change throttle delay to 500 and enter the expression

"https://api.twitter.com/1/statuses/show.json?id="+value+"&include_entities=true"(that’s with quotes), then click OK. [What we’ve done is extract a tweet id and then asked refine to fetch details about it from the Twitter API] - Wait a bit (it took about 4 hours to get data from 8,000 tweets)

Once you have the raw data you can use Refine to make new columns using the expression parseJson(value) then navigate the object namespace. You might find it useful to paste a cell value into http://jsonviewer.stack.hu/ to see the structure. So to make a column which extracts the tweets full name you’d use parseJson(value).user.name

So don’t delay ‘free the tweets’

A Handful of Google Hacks… « OUseful.Info, the blog…

[…] for example, from this post of Martin’s – Free (and rebuild) the tweets! Export TwapperKeeper archives using Google Refine – I realised that Google Refine offers all sorts of import options I hadn’t really […]

Ralf

What does “l=50000” do? I expect it’s a limit on the number of tweets being fetched? Is there an upper limit you are aware of?

Martin Hawksey

Yes and yes. It is the number returned and the max is 50000. You can get more by setting a last date of the last tweet. Tony Hirst used this technique used in the last link in the intro paragraph

Hywel M

I wrote a Python script last week to scrape the full details from a TwapperKeeper archive. Here’s a link: http://dl.dropbox.com/u/15813120/twkp_publiccode.py. When it runs it asks for the name of the archive (e.g. rhanidan) and the output path (e.g. C:\\Python26\\mytestfile.csv). Here’s a text file which will give you an idea of the format of the csv output file you’ll get: http://dl.dropbox.com/u/15813120/rhanidan.csv

That csv file can be read straight into Refine and it will get split into sensibly named columns automatically.

I hope that may be of some use. Thanks for your post (and previous ones). I’ve found them very useful.

@hywelm

Martin Hawksey

@hywelm have you considered taking that script one step further and use something like the tweepy lib to get the meta with py?

Hywel M

So many libraries! I can see how one could. Use a regex like:

twtsrchid = re.compile(r’tweet_id=(\d+)’)

tweetid = twtsrchid.search(dolen).group(1)

tweet_stat = tweepy.api.get_status(tweetid)

Then tweet_stat.text, tweet_stat.user_id etc would give the meta data which one could put in a list or dict and write out.

My solution worked pretty well and given there’s only a couple of days left to grab the archives I’m not going to bother to revise. Thanks for the suggestion though.

Martin Hawksey

Not a py coder myself but looks right. Main thing is you’ve got the raw data so you can get extra if need later.

You might like the 3 links at the beginning of this post by Tony Hirst (ouseful.info) which all use python, the last one with a way to get archives bigger than 50k.

Sarah Marshall

Google Refine doesn’t seem to like fetching more than 100 tweets and is returning an unresponsive page error. http://twapperkeeper.com/rss.php?type=hashtag&name=newsrw&l=100 works but that seems to be the max for me. There are 16,000 tweets in the archive.

I’ve archived the most recent 15,000 into a Google Doc using your very helpful post – so thanks! http://mashe.hawksey.info/2011/12/free-the-tweet/

Martin Hawksey

Hi Sarah – looks like its Refine tripping up on the import or a temporary glitch twapperkeeper side. You might want to open the rss feed in your browser and save the page source and then import the archive into refine as a local file

Martin

Sarah Marshall

Thanks Martin

I think Refine was conflicting with one of my browser extensions. It’s now working fine. Just waiting for the final stage. Thanks again!

Sarah

Social Interest Positioning – Visualising Facebook Friends’ Likes « OUseful.Info, the blog…

[…] discovering via Martin Hawksey that the recent (December, 2011) 2.5 release of Google Refine allows you to import JSON and XML […]

Noch wenige Stunden mit TwapperKeeper! « LIBREAS.Library Ideas

[…] Free (and rebuild) the tweets! Export TwapperKeeper archives using Google Refine […]

Hywel M.

Yes, I’m aware of the good stuff Tony Hearst produces. I’d had a look at his most recent script and tried it. I’d not used feedparser before. If I recall correctly though, it didn’t get the meta data.

What can we learn from the #ukoer Twitter archive [day 24] – MASHe

[…] are obvious issues with this approach, but I think it’s a useful starter for ten]Having grabbed a copy of the #ukoer Twapper Keeper archive using Google Refine before it disappeared I’ve got over 8,000 tweets from 8th March 2010 to 3rd January 2012. […]

magdmartin

Great post and intro on importing xml file. You might want have a look at scraperwiki (https://scraperwiki.com/) as an alternative solution to twapperkeeper.