I’m enrolled on Learning Analytics and Knowledge (LAK13), an open online course introducing data and analytics in learning. As part of my personal assignment I thought it would be useful to share some of the data collection and analysis techniques I use for similar courses, and to take the opportunity to extend some of them. I should warn you that some of these posts will include very technical information. Please don’t run away: more often than not I’ll leave you with a spreadsheet where you fill in a cell and the rest is done for you. Let’s start with Twitter.

Twitter basics

Like other courses, LAK is using a course hashtag, in this case #lak13, to allow tweets to be aggregated. Participants can either watch the Twitter Search for #lak13 or, depending on their Twitter application of choice, view the stream there. Until recently a common complaint about Twitter search was that it was limited to the last 7 days (Twitter are now rolling out search for a small percentage of older tweets). Whilst this limit is perhaps less of an issue given the velocity of the Twitter stream, for course tutors and students having longitudinal data can be useful. Fortunately the Twitter API (an API is a way for machines to talk to each other) gives developers a way to access Twitter’s data and use it in their applications. Twitter’s API is in transition from version 1 to 1.1, with version 1 being switched off this March, which is making things interesting. For the part of the API handling search results, the biggest changes are the:

- removal of data returned in Atom feed format; and

- removal of access without login

This means you’ll soon no longer be able to create a Twitter search that you can watch in an RSS feed aggregator like Google Reader, like this one for #lak13.

All is not lost as the new version of the API still allows access to search results but only as JSON.

JSON (pron.: /ˈdʒeɪsən/ jay-sun, pron.: /ˈdʒeɪsɒn/ jay-sawn), or JavaScript Object Notation, is a text-based open standard designed for human-readable data interchange – http://en.wikipedia.org/wiki/JSON

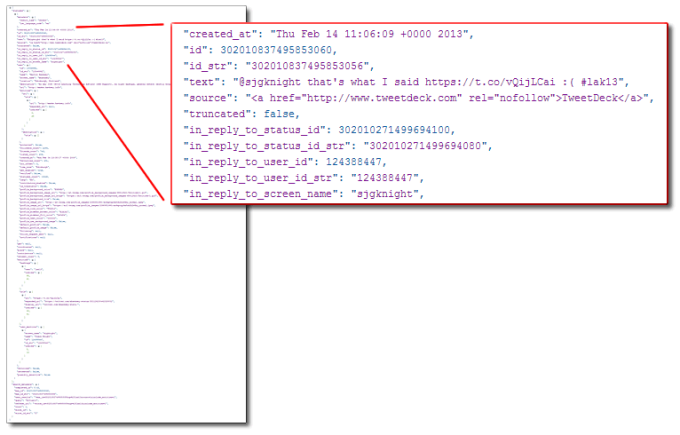

I don’t want to get too bogged down in JSON, but basically it provides a structured way of sharing data. Many websites and web services pass lots of JSON data to your browser, which renders it nicely for you to view. Let’s take a single tweet as an example:

Whilst the tweet looks like it just has some text, links and a profile image, underneath the surface there is much more data. To give you an idea, highlighted are 11 of the 130 lines of metadata associated with a single tweet. Here is the raw data for you to explore for yourself. In it you’ll see information about the user, including location and friend/follower counts; a breakdown of entities like other people mentioned and links; and ids for the tweet and for the tweet it is in reply to.
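To give a feel for how that metadata is structured, here is a minimal Python sketch that pulls the user, entity and id fields out of a tweet. The tweet itself is invented and heavily trimmed, summarise_tweet is a hypothetical helper, and the field names (id_str, in_reply_to_status_id_str, user.followers_count, entities.user_mentions and so on) are my reading of the version 1.1 tweet format, so check them against the raw data before relying on them.

```python
import json

# An invented, heavily trimmed tweet; a real one carries far more metadata.
RAW_TWEET = """
{
  "id_str": "300000000000000002",
  "text": "@alice great session on #lak13 http://t.co/abc",
  "created_at": "Mon Feb 04 10:00:00 +0000 2013",
  "in_reply_to_status_id_str": "300000000000000001",
  "user": {
    "screen_name": "bob",
    "location": "Edinburgh",
    "followers_count": 250,
    "friends_count": 180
  },
  "entities": {
    "hashtags": [{"text": "lak13"}],
    "user_mentions": [{"screen_name": "alice"}],
    "urls": [{"expanded_url": "http://example.com/slides"}]
  }
}
"""


def summarise_tweet(raw):
    """Return a flat dict of the user, entity and id fields of one tweet."""
    tweet = json.loads(raw)
    user = tweet.get("user", {})
    entities = tweet.get("entities", {})
    return {
        "id": tweet["id_str"],
        "in_reply_to": tweet.get("in_reply_to_status_id_str"),
        "screen_name": user.get("screen_name"),
        "location": user.get("location"),
        "followers": user.get("followers_count"),
        "friends": user.get("friends_count"),
        "mentions": [m["screen_name"] for m in entities.get("user_mentions", [])],
        "hashtags": [h["text"] for h in entities.get("hashtags", [])],
        "links": [u["expanded_url"] for u in entities.get("urls", [])],
    }


if __name__ == "__main__":
    for key, value in summarise_tweet(RAW_TWEET).items():
        print(f"{key}: {value}")
```

Using .get() for the optional parts means a tweet with no mentions or links still summarises cleanly rather than raising an error.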

One other Twitter basic that catches a lot of people out is that the Search API is limited to the last 1500 tweets. So if you have a popular tag with over 1500 tweets in a day, at the end of the day only the last 1500 of them are accessible via the Search API.
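A toy simulation makes the consequence of the 1500 limit clearer. It is a sketch that assumes search behaves as a simple sliding window over the newest 1500 tweets, with invented integer ids standing in for tweets, and the two helper functions are hypothetical. A single search at the end of a 2000-tweet day misses the first 500, while searching after every 100 new tweets and keeping everything seen catches them all.

```python
from collections import deque

SEARCH_WINDOW = 1500  # newest tweets a search can reach, per the limit described above


def end_of_day_capture(tweet_ids, window=SEARCH_WINDOW):
    """Model one search run after the whole day's tweets have arrived."""
    visible = deque(tweet_ids, maxlen=window)  # only the newest `window` ids survive
    return set(visible)


def periodic_capture(tweet_ids, batch_size, window=SEARCH_WINDOW):
    """Model a search run after every `batch_size` new tweets, keeping all results."""
    visible = deque(maxlen=window)
    archive = set()
    for n, tweet_id in enumerate(tweet_ids, start=1):
        visible.append(tweet_id)  # the oldest id drops off once the window is full
        if n % batch_size == 0 or n == len(tweet_ids):
            archive.update(visible)
    return archive


if __name__ == "__main__":
    day = list(range(1, 2001))  # 2000 invented tweet ids, oldest first
    print(len(end_of_day_capture(day)))                # 1500
    print(len(periodic_capture(day, batch_size=100)))  # 2000
```

Under this model nothing is lost as long as you search again before another 1500 tweets have arrived.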

Archiving tweets for analysis

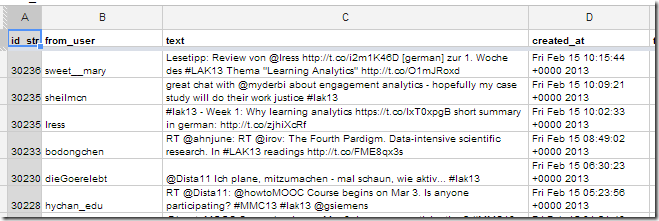

So there is potentially some rich data contained in tweets, but how can we capture it for analysis? There are a number of paid-for services like eventifier that let you specify a hashtag for archiving and analysis. As well as not being free, these services don’t always make the raw data available. My solution has been to develop a Google Spreadsheet to archive searches from Twitter (TAGS). This is just one of many solutions, others including pulling data directly using R and Tableau, but the main advantage of this one for me is that I can set it up and it will happily collect new data automatically.
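What lets a scheduled archive get around the 7-day and 1500-tweet limits is that each run only needs to add what is new. Here is a minimal Python sketch of that merge step. It is not how TAGS itself is written (TAGS runs inside a Google Spreadsheet), and merge_into_archive and next_since_id are hypothetical helpers; since_id is the Search API parameter for asking only for tweets newer than a given id.

```python
def merge_into_archive(archive, new_results):
    """Add search results to an archive keyed on tweet id, skipping duplicates.

    archive: dict of id_str -> tweet dict, updated in place.
    new_results: list of tweet dicts that each have an "id_str" key.
    Returns how many tweets were new.
    """
    added = 0
    for tweet in new_results:
        tweet_id = tweet["id_str"]
        if tweet_id not in archive:
            archive[tweet_id] = tweet
            added += 1
    return added


def next_since_id(archive):
    """Newest id in the archive, to pass as since_id on the next search.

    Ids are compared as integers; comparing the strings would misorder
    ids of different lengths (e.g. "10" < "9").
    """
    if not archive:
        return None
    return max(archive, key=int)


if __name__ == "__main__":
    archive = {}
    # Two overlapping runs of a hypothetical search; tweet "2" appears in both.
    print(merge_into_archive(archive, [{"id_str": "1"}, {"id_str": "2"}]))   # 2
    print(merge_into_archive(archive, [{"id_str": "2"}, {"id_str": "10"}]))  # 1
    print(next_since_id(archive))  # 10
```

Keying on the id makes overlapping runs harmless, so it is safe to search more often than strictly necessary.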

Setting this up to capture search results for #lak13 gives us the data in a spreadsheet.

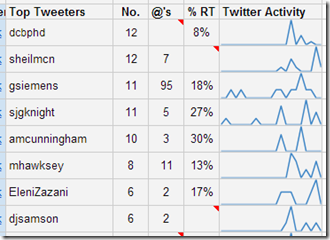

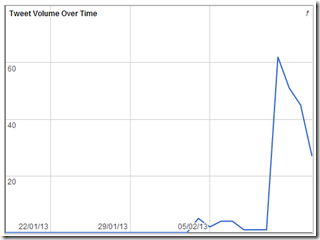

This makes it easy to get overviews of the data using the built-in templates, or, as I’d like to spend the rest of this post doing, to quickly look at ways of creating different views.

As you will no doubt discover, using a spreadsheet environment for this has pros and cons. On the plus side, it’s easy to use built-in charts and formulas to analyse the data and identify queries that might be useful for further analysis. The downside is that you are limited in the level of complexity: trying to do things like term extraction or n-grams is probably not going to work. All is not lost, as Google Sheets makes it easy to extract the data and consume it in other applications like R, Datameer and others.
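For a sense of what lies just beyond a spreadsheet’s comfort zone, here is a minimal Python sketch that counts the most common word pairs (bigrams) in a set of tweets. The tokenising rule (lower-case, keep #hashtags and @mentions, drop links) is a simple assumption of mine rather than any standard, the helper names are hypothetical, and the sample tweets are invented.

```python
import re
from collections import Counter


def tokens(text):
    """Lower-case words, #hashtags and @mentions, with links removed."""
    text = re.sub(r"https?://\S+", " ", text.lower())
    return re.findall(r"[#@]?\w+", text)


def top_bigrams(tweets, n=5):
    """The n most common adjacent word pairs across all tweets."""
    counts = Counter()
    for text in tweets:
        words = tokens(text)
        counts.update(zip(words, words[1:]))  # pairs of neighbouring tokens
    return counts.most_common(n)


if __name__ == "__main__":
    sample = [
        "Learning analytics at #LAK13 http://t.co/example",
        "What is learning analytics for? #lak13",
        "Learning analytics and privacy #lak13",
    ]
    print(top_bigrams(sample, n=3))
```

Extending this to trigrams only means zipping three offset copies of the token list, which is where a scripting language starts to pay off over cell formulas.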

Using Google Sheets to import and query data

I’ve got a post on Feeding Google Spreadsheets: Exercises in using importHTML, importFeed, importXML, importRange and importData if you want to learn about other import options, but for now we are going to use importRange to pull data from one spreadsheet into another.

If you open this spreadsheet and choose File > Make a copy, you’ll get a version that you can edit. In cell A1 of the Archive sheet you should see the following formula: =importRange("0AqGkLMU9sHmLdEZJRXFiNjdUTDJqRkNhLUxtZE5FZmc","Archive!A:K")

This pulls columns A to K of the Archive sheet from this spreadsheet, where I’m already collecting LAK13 tweets. (Note: this technique doesn’t scale well, so when LAK starts hitting thousands of tweets you are better off doing manipulations in the source spreadsheet than using importRange. I’m doing it this way to get you started and let you try some things out.)

FILTER, FREQUENCY and QUERY

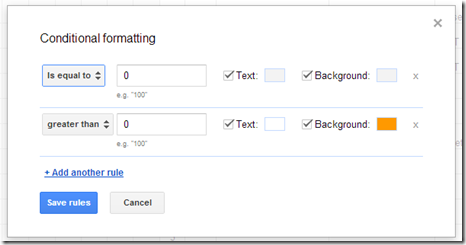

On the Summary sheet I’ve extended the summary available in TAGS by including weekly breakdowns. The entire sheet is built from a handful of formulas used in slightly different ways, with a dusting of conditional formatting. I’ve highlighted a couple of these:

- cell G2: =TRANSPOSE(FREQUENCY(FILTER(Archive!E:E,Archive!B:B=B2),S$15:S$22))

  - FILTER – returns an array of the dates of the tweets the person named in cell B2 has made in the archive

  - FREQUENCY – calculates the frequency distribution of these dates, using the dates listed in S15:S22 as bin boundaries, and returns a count for each bin in rows starting from the cell the formula is in

  - TRANSPOSE – converts the values from a vertical to a horizontal layout so they fill across the sheet rather than down

- cell P2: =COUNTIF(H2:O2,">0")

  - counts how many of the values in row 2 from column H to O are greater than zero, giving the number of weeks the user has participated

- cells H2:O – conditional formatting

- cell B1: =QUERY(Archive!A:B," Select B, COUNT(A) WHERE B <> '' GROUP BY B ORDER BY COUNT(A) desc LABEL B 'Top Tweeters', COUNT(A) 'No.'",TRUE)

  - QUERY – lets you use Google’s Query Language, which is similar to the SQL used in relational databases. In this example the data source is columns A and B of the Archive sheet. We select column B (the screen name of the tweeter) and the count of column A (this could be any other column with a unique value) where B is not blank. The results are grouped by B (screen name) and ordered by count, and the query also relabels the output columns.
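If you later move the data out of the spreadsheet, the G2 and P2 formulas translate fairly directly. This Python sketch groups dates by screen name (doing the FILTER step for every user at once), bins them the way FREQUENCY does (a date falls in the first bin whose boundary is on or after it, with one extra bin for anything after the last boundary) and counts non-empty bins like COUNTIF(...,">0"). The helper names, week boundaries and tweets are invented, and the dates are plain date objects rather than the timestamps in the Archive sheet.

```python
from bisect import bisect_left
from collections import defaultdict
from datetime import date


def weekly_counts(tweets, boundaries):
    """Per-user tweet counts binned the way FREQUENCY bins values.

    tweets: iterable of (screen_name, date) pairs.
    boundaries: sorted dates, playing the role of S15:S22.
    Bin i counts dates d with boundaries[i-1] < d <= boundaries[i]; the
    extra final bin counts dates after the last boundary.
    """
    per_user = defaultdict(lambda: [0] * (len(boundaries) + 1))
    for screen_name, when in tweets:
        per_user[screen_name][bisect_left(boundaries, when)] += 1
    return dict(per_user)


def active_weeks(counts):
    """Like COUNTIF(range, ">0"): the number of bins with at least one tweet."""
    return sum(1 for c in counts if c > 0)


if __name__ == "__main__":
    weeks = [date(2013, 2, 3), date(2013, 2, 10), date(2013, 2, 17)]
    sample = [("alice", date(2013, 2, 4)), ("alice", date(2013, 2, 12)),
              ("bob", date(2013, 2, 5)), ("alice", date(2013, 2, 20))]
    for name, counts in weekly_counts(sample, weeks).items():
        # counts[1:] mirrors H2:O2, i.e. everything except the first value, which lands in G2
        print(name, counts, active_weeks(counts[1:]))
```

Because the TRANSPOSE output starts in G2 while the COUNTIF in P2 looks at H2:O2, the usage skips the first bin to stay faithful to the sheet.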

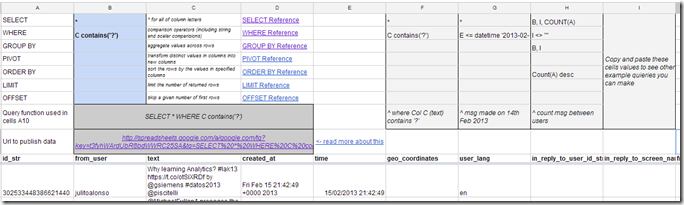

QUERY Out

To give you some ideas of the queries you can run on the Twitter data, the spreadsheet you copied includes a Query sheet with some examples. Included are sample queries that filter tweets containing ‘?’, which might indicate questions (even if rhetorical), time-based filters and counts of messages between users.
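Because the Query Language is SQL-like, the same kinds of questions can be asked of the data once it is exported. The sketch below uses Python’s built-in sqlite3 with an in-memory table. The column names and rows are hypothetical rather than the TAGS headers, and the queries are my own versions of the top-tweeters grouping from cell B1 and the question, time-based and between-user filters described above.

```python
import sqlite3

# Hypothetical rows: (id_str, from_user, tweet_text, created, to_user)
ROWS = [
    ("1", "alice", "What counts as engagement? #lak13", "2013-02-04T09:00", None),
    ("2", "bob", "@alice replies, logins, posts #lak13", "2013-02-04T09:30", "alice"),
    ("3", "alice", "@bob thanks! #lak13", "2013-02-05T10:00", "bob"),
    ("4", "carol", "Reading week 1 papers #lak13", "2013-02-11T08:00", None),
    ("5", "bob", "@alice any slides? #lak13", "2013-02-12T12:00", "alice"),
]

QUERIES = {
    # Same shape as the QUERY in cell B1: tweets per user, busiest first.
    "top_tweeters": """SELECT from_user, COUNT(id_str) AS n FROM tweets
                       WHERE from_user <> '' GROUP BY from_user
                       ORDER BY n DESC, from_user""",
    # Tweets containing '?', which might be questions.
    "questions": "SELECT id_str FROM tweets WHERE tweet_text LIKE '%?%' ORDER BY id_str",
    # Time filter: ISO 8601 strings sort chronologically, so string comparison works.
    "week_2": "SELECT id_str FROM tweets WHERE created >= '2013-02-11' ORDER BY id_str",
    # Counts of messages between pairs of users.
    "conversations": """SELECT from_user, to_user, COUNT(*) AS n FROM tweets
                        WHERE to_user IS NOT NULL GROUP BY from_user, to_user
                        ORDER BY n DESC, from_user""",
}


def run_queries(rows):
    """Load rows into a throwaway table and return the results of every query."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tweets (id_str TEXT, from_user TEXT, "
                 "tweet_text TEXT, created TEXT, to_user TEXT)")
    conn.executemany("INSERT INTO tweets VALUES (?, ?, ?, ?, ?)", rows)
    results = {name: conn.execute(sql).fetchall() for name, sql in QUERIES.items()}
    conn.close()
    return results


if __name__ == "__main__":
    for name, result in run_queries(ROWS).items():
        print(name, result)
```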

Tony Hirst has written more about Using Google Spreadsheets as a Database with the Google Visualisation API Query Language, which includes creating queries to export data.

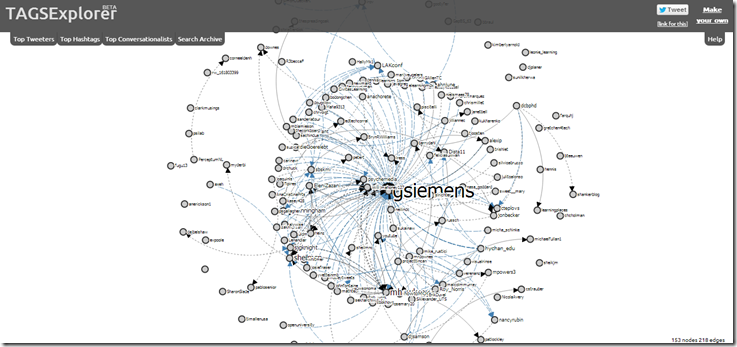

Other views of the data

The ability to export the data in this way opens up some other opportunities. Below is a screenshot of an ego/conversation-centric view of #lak13 tweets rendered using the D3 JavaScript library. Whilst this view onto the archive is experimental, hopefully it illustrates some of the opportunities.
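As a rough idea of the data behind an ego-centric view, this Python sketch collects the @mention links in which one chosen user is either the sender or the recipient, and writes them out in the nodes/links JSON shape used by many D3 force-layout examples. It is an assumption-laden illustration rather than the code behind the screenshot; the ego_network helper is hypothetical and the sample tweets are invented.

```python
import json
import re

MENTION = re.compile(r"@(\w+)")


def ego_network(tweets, ego):
    """Nodes/links for the @mention conversations one user takes part in.

    tweets: iterable of (sender_screen_name, text) pairs.
    Screen names are lower-cased, since Twitter treats them case-insensitively.
    """
    ego = ego.lower()
    weights = {}
    for sender, text in tweets:
        sender = sender.lower()
        for target in MENTION.findall(text):
            target = target.lower()
            # keep only links touching the ego, and ignore self-mentions
            if ego in (sender, target) and sender != target:
                weights[(sender, target)] = weights.get((sender, target), 0) + 1
    names = sorted({name for pair in weights for name in pair} | {ego})
    index = {name: i for i, name in enumerate(names)}
    return {
        "nodes": [{"name": name} for name in names],
        "links": [
            {"source": index[s], "target": index[t], "value": w}
            for (s, t), w in sorted(weights.items())
        ],
    }


if __name__ == "__main__":
    sample = [
        ("bob", "@alice hi"),
        ("alice", "@bob @carol thanks"),
        ("carol", "@dave hello"),
        ("bob", "@Alice again"),
    ]
    print(json.dumps(ego_network(sample, "alice"), indent=2))
```

Links use node indices rather than names because that is what older D3 force layouts expect; the "value" field carries how many times one user mentioned the other.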

Summary

Hopefully this post has highlighted some of the limitations of Twitter search, but also how data can be collected and the opportunities to rapidly prototype some basic queries. I’m conscious that I haven’t provided any answers about how this can be used within learning analytics beyond surface activity monitoring, but I’m going to let you work that one out. If you want to see some of my work in this area you might want to check out the following posts:

- First look at analysing threaded Twitter discussions from large archives using NodeXL #moocmooc

- Any Questions? Filtering a Twitter hashtag community for questions and responses [situational awareness] #CFHE12

- CFHE12 Analysis: Summary of Twitter activity

- Integrating Google Spreadsheet/Apps Script with R: Enabling social network analysis in TAGS

- Using Google Spreadsheets as a data source to analyse extended Twitter conversations in NodeXL (and Gephi)

- TAGSExplorer: Interactively visualising Twitter conversations archived from a Google Spreadsheet

#LAK13: Recipes in capturing and analyzing data – Canvas Network Discussion Activity Data Jisc CETIS MASHe

[…] my last post I looked at the data available around a course hashtag from Twitter. For this next post I want to start looking at what’s available around the Canvas Network […]

Fridolin Wild

Hi Martin, I added a cRunch tutorial on how to pull this archive and create a bubble chart illustrating activity per user per course week.

See here:

http://crunch.kmi.open.ac.uk/people/~fwild/services/lak13twittermonitor-tutorial.Rmw

Or for the ‘chart only’ version here:

http://crunch.kmi.open.ac.uk/people/~fwild/services/lak13twittermonitor.Rmw

It’s not pulling live data (but using a stored, static version), so don’t worry about the load it might produce 😉

Thanks for your great community effort in setting up this archive!

Best,

Fridolin

Martin Hawksey

Hi Fridolin,

Thanks for the links. I let Google worry about the load 😉 Great to see how the numbers are crunched. On the second link for some reason it’s throwing some errors?

Cheers,

Martin

Fridolin Wild

That bug is fixed now — finishing up things yesterday I was a bit too quick in removing the line calculating the ndays (number of days of the course). Funny: it seems that rApache is not fully protecting memory and variables can ‘leak’ over from the Rstudio thread to the rApache R thread. I guess it’s meant to be a feature, but in this case it’s quite annoying.

Fridolin Wild

and I have updated the second script (with just the image) to fetch the data now live. Let’s have Google worry about load, you’re right 🙂