Back in February I was announced as one of the featured artists at Domains19. Jim has captured the moment in the announcement post:

The idea for having Martin speak at Domains was a happy accident while speaking with both Maren Deepwell and Martin about ideas for OER19. He had done something wild with a Raspberry Pi and facial recognition at DevFest London in 2017, and when we were talking about the conference themes around Domains19 he linked to his post on the talk. We were immediately sold, this kind of Minority Report-esque installation that explores the ethical boundaries of the tech we have come to take for granted is exactly what we were hoping Domains19 would manifest.

That gave me 8 months to ponder and plot. You can read about the result of all that pondering and plotting in #Domains19: Minority Report – One Nation Under CCTV. In this post I’d like to share some of my Domains journey, in particular the video production pieces I put together and how they were made.

Minority Report Rebooted

If you’re not familiar with Minority Report, one of the main plot points is that in 2054 public spaces use iris recognition to track the movements of the general populace and to push targeted advertising. For the start of my talk I wanted to plant the seed of a national surveillance system used for both law enforcement and advertising … no separation of ad and state. At the same time I wanted to suggest that wearing sunglasses could defeat an iris recognition system.

I made a few callbacks to this clip during my talk, including how so-called ‘burka bans’ are often actually used to legislate against face coverings in public spaces, how facial recognition systems can be defeated even when wearing sunglasses, and how facial recognition can be used to oppress the innocent.

My Minority Report Rebooted clip is embedded below. For the edit I wanted to use the scene where the iris recognition system is explained, followed by the scene where it is used for targeted advertising as John Anderton (played by Tom Cruise) goes to catch a train.

With Reclaim Video in mind, I thought I could skip through to the clips I needed by spoofing a VHS fast forward. I did briefly look at DaVinci Resolve and found some tutorials on VHSifying clips, but after a quick try decided it was going to be too steep a learning curve for the time I had. Instead, as a long-time user of Corel VideoStudio (I think I even had a copy when it was developed by Ulead), I figured I could do something similar in it to make the edit.

Having bought Minority Report on DVD and ripped it with HandBrake, I dropped it into VideoStudio and cut the clips I needed. For the scenes between clips I used Speed/Time-lapse to compress the playback. For a couple of clips the maximum speed wasn’t quick enough, so I sped them up as much as I could, exported them, re-imported them and compressed them further. For a tape spooling effect I used Christopher Huppertz’s ‘VHS Glitch’ clip as an overlay, then dropped in a fast-forward image with an ‘earthquake’ special effect applied, plus a spooling sound clip.
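The export/re-import trick works because speed-ups multiply: two passes at a cap of m give m × m overall. As a rough planning aid, here’s a minimal Python sketch that splits a required speed-up into per-pass factors. The function name is made up for illustration, and `max_speed` is a placeholder rather than VideoStudio’s actual limit.

```python
def plan_speedup_passes(source_s: float, target_s: float, max_speed: float) -> list[float]:
    """Return the speed factor to apply on each export/re-import pass.

    Each pass is capped at max_speed, so n passes give at most max_speed ** n.
    Full-speed passes are used until the remaining speed-up fits in one pass;
    the final pass applies whatever factor is left, so the product is exact.
    """
    if source_s <= 0 or target_s <= 0:
        raise ValueError("durations must be positive")
    if max_speed <= 1:
        raise ValueError("max_speed must be greater than 1")
    remaining = source_s / target_s  # total speed-up still required
    passes: list[float] = []
    while remaining > max_speed:
        passes.append(max_speed)  # one pass at the editor's maximum speed
        remaining /= max_speed
    passes.append(remaining)  # last pass covers the leftover factor
    return passes
```

For example, `plan_speedup_passes(600, 3, 10)` gives `[10, 10, 2.0]`: squeezing a 10-minute scene into 3 seconds with a hypothetical 10× cap needs three passes.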

For my cameo I spotted a scene where it cuts to someone reading a newspaper and recognising ‘John’ on the train. Thanks to the regular live streaming I do for ALT, I have lots of video equipment knocking around my house, but no video studio or, most importantly, green screen. My hack was to show a static green image on one of our TVs and then film myself in front of it.
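A TV showing a green image is never as even as a proper green screen, which is why keying works on a tolerance rather than an exact colour. The sketch below is a generic NumPy illustration of the idea, not how VideoStudio’s chroma key works internally: a pixel is treated as background when its green channel beats both red and blue by more than `margin`, an assumed threshold you would tune per shot.

```python
import numpy as np


def chroma_key(fg: np.ndarray, bg: np.ndarray, margin: int = 40) -> np.ndarray:
    """Composite fg over bg, replacing 'green enough' fg pixels with bg.

    fg and bg are uint8 RGB arrays of the same shape (H, W, 3).
    """
    if fg.shape != bg.shape:
        raise ValueError("foreground and background must be the same shape")
    rgb = fg.astype(np.int16)  # widen so subtraction can't wrap around like uint8
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    is_green = (g - r > margin) & (g - b > margin)  # (H, W) boolean mask
    # Broadcast the mask over the colour channels and pick bg where it's green.
    return np.where(is_green[..., None], bg, fg)
```

A larger `margin` keeps more of the foreground (safer for skin tones and clothing) at the cost of leaving uneven patches of the TV visible.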

My aim wasn’t to get a perfect shot, as I wanted to make sure it was obvious this wasn’t part of the original movie. As the clip of the ‘man with newspaper’ was also too short, there is lots of hacky looping and slow-motion playback, which I think adds to the surrealness.

VJing with OBS (the art of surveillance)

In the early conversations with Jim about my talk, one of the things I wanted to explore was doing a live production, which would make it easier to integrate live demos, video and music with more traditional slides. I first came across the idea of VJing an academic presentation back in 2010 from Tony Hirst, who in turn had taken inspiration from Brian Lamb, Scott Leslie, Jim Groom and Alan Levine. My plan was to use OBS Studio to create some scenes I could switch between during my talk. One of my original ideas was that each scene would be its own chapter, starting with its own soundtrack. In the end, time pressure meant I had to scale back most of my original ideas.
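The chapter-per-scene idea could in principle be scripted rather than switched by hand. OBS Studio (version 28 and later) ships with obs-websocket, which accepts JSON messages over a WebSocket. The minimal sketch below only builds the protocol version 5 messages with the Python standard library. It assumes you send them with a WebSocket client of your choice, and the scene names in `CHAPTERS` are hypothetical.

```python
import base64
import hashlib
import json
import uuid
from typing import Optional

# Hypothetical chapter plan: each chapter is an OBS scene with these names.
CHAPTERS = ["Intro", "Multifeed", "Demo"]


def obs_auth_string(password: str, salt: str, challenge: str) -> str:
    """Authentication value for Identify, from the salt/challenge in OBS's Hello."""
    secret = base64.b64encode(hashlib.sha256((password + salt).encode()).digest()).decode()
    return base64.b64encode(hashlib.sha256((secret + challenge).encode()).digest()).decode()


def identify_message(authentication: Optional[str] = None) -> str:
    """Identify (op 1) message; include authentication only if OBS requires it."""
    d: dict = {"rpcVersion": 1}
    if authentication is not None:
        d["authentication"] = authentication
    return json.dumps({"op": 1, "d": d})


def switch_scene_request(scene_name: str) -> str:
    """Request (op 6) asking OBS to make scene_name the live program scene."""
    return json.dumps({
        "op": 6,
        "d": {
            "requestType": "SetCurrentProgramScene",
            "requestId": str(uuid.uuid4()),  # lets you match OBS's response
            "requestData": {"sceneName": scene_name},
        },
    })
```

Moving to the next chapter would then mean sending `switch_scene_request(CHAPTERS[i])` after the identify handshake. A soundtrack per chapter would still need its own media source in each scene.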

Whilst I probably fell short of a true VJ experience, I’d like to think I created something interesting that added to the mood of my talk. In the end I was able to embed several real-time surveillance feeds, shown in the picture sava saheli singh shared on Twitter:

I mean… #Domains19 pic.twitter.com/OKpm6HAQIL

— 🖤 dr. savasavasava 🖤 (@savasavasava) 11 June 2019

I’m glad sava caught this shot because, in the pressure to get everything set up and with a few technical glitches of my own making, it was probably two thirds of the way through my talk before I realised that, rather than delivering my intended ‘experience’, I had just been delivering slides and videos and had forgotten to switch back to the full-screen multifeed scene.

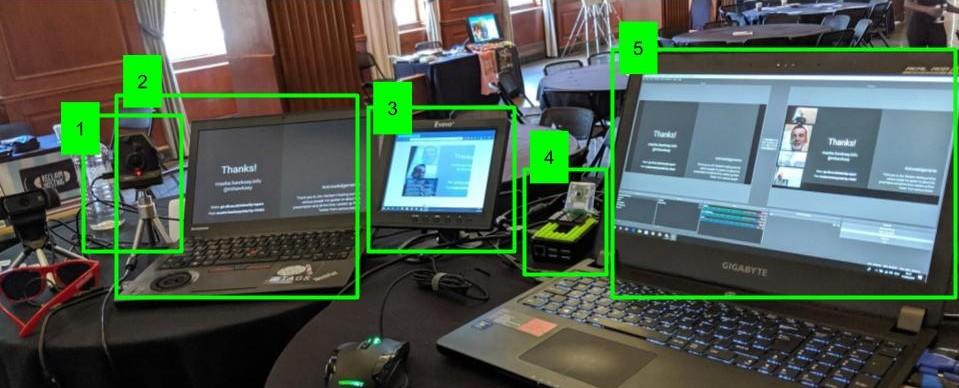

This is probably one of the biggest challenges of doing a solo VJ set: there is a hell of a lot going on. To give you a sense, here’s what it looked like from my side:

To briefly walk you through the setup in the pic: [1] a Zoom Q2n used for a wide shot and as a mic; [2] a laptop with slides and some live demos; [3] an external monitor used with the Raspberry Pi [4] and as a second display for the other laptop [5], which was running OBS Studio. Not shown in this pic are the two capture boxes I used to get video sources from the presentation laptop [2] and the Pi [4].

The picture below, taken by Tom Woodward, captures the live surveillance feeds I used during my talk. Starting from the top, the first image is the shot from the Pi [4], which has been put through OpenCV to detect and highlight any faces. The next image down is from the same Pi camera but has been processed by the Kairos service to add demographic data, with the face detection data used to crop the shot. Second from bottom is the shot from the Zoom Q2n cam [1], which has an animated gif overlay and colour correction filters applied in OBS Studio. The bottom image is from the webcam on the OBS Studio laptop [5], processed by the Google Vision API, which you can try on this page.
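Cropping a shot around a detected face, as in the second feed, boils down to padding the face’s bounding box and clamping it to the frame edges. A minimal sketch, assuming the detector gives a box as left/top/width/height in pixels. The `Box` type and `padded_face_crop` function are hypothetical, not Kairos’s actual response format, and `pad` (half the face size on each side) is an arbitrary choice.

```python
from typing import NamedTuple


class Box(NamedTuple):
    x: int  # left edge in pixels
    y: int  # top edge in pixels
    w: int  # width in pixels
    h: int  # height in pixels


def padded_face_crop(face: Box, frame_w: int, frame_h: int, pad: float = 0.5) -> Box:
    """Grow a face box by `pad` of its size on every side, clamped to the frame."""
    dx = int(face.w * pad)
    dy = int(face.h * pad)
    left = max(0, face.x - dx)
    top = max(0, face.y - dy)
    right = min(frame_w, face.x + face.w + dx)
    bottom = min(frame_h, face.y + face.h + dy)
    return Box(left, top, right - left, bottom - top)
```

For example, `padded_face_crop(Box(100, 100, 50, 60), 640, 480)` returns `Box(x=75, y=70, w=100, h=120)`. A face near the edge of the frame simply gets a smaller crop rather than one that runs off the image.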

So whilst I didn’t hit all the notes I was aiming for, I really enjoyed the creative process of piecing together both the OBS Studio setup and the Minority Report Rebooted clip. Whilst it’s unlikely that I’ll ever need to go through the complexities of recreating my surveillance setup from my Domains talk, knowing I can use my little Zoom Q2n as a video and audio source for OBS Studio means I’ll more than likely revisit this to record future presentations, and as long as there are no glitches I should get a complete recording ;s